Don’t Underestimate NVIDIA’s AgentIQ

AI model development has accelerated significantly in the last six months, driven by three scaling laws: pre-training scaling, post-training scaling, and inference-time scaling. These laws enable continuous enhancement of model capabilities by adding more compute, with no apparent limit in sight.

For instance, models like Claude 3.7 and DeepSeek v3 demonstrate improved performance and plummeting costs, while OpenAI’s models show that longer inference times and searches yield better results.

This cost reduction is expected to increase demand, following Jevons' Paradox, where efficiency gains lead to higher total consumption. NVIDIA's GTC emphasized addressing these scaling paradigms to drive an "explosion in intelligence and tokens," suggesting a future where AI capabilities expand rapidly.

AI agents are quickly becoming a core part of how artificial intelligence is applied in practice. NVIDIA is actively developing this area, enabling AI agents to independently perform tasks that require reasoning, planning, and executing decisions with minimal human input.

AI agents are simply systems capable of "reasoning, planning, and executing complex tasks based on high-level goals."

These agents differ from traditional AI models because they're designed to operate autonomously, using several key technologies:

- Large Language Models (LLMs): To interpret commands and generate human-like responses.

- Memory Modules: Short-term memory for recent tasks and long-term memory for historical data.

- Planning Modules: Techniques like Chain of Thought, ReAct, or Reflexion, which help agents structure their decision-making processes.

- External Tools: Integration with APIs, databases, and Retrieval-Augmented Generation (RAG) systems for accessing external information.
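To make the four components above concrete, here is a minimal sketch of how they might fit together in a single agent loop. Everything in it is hypothetical and for illustration only: `fake_llm` stands in for a real model call, `weather_tool` stands in for an external API, and the `ACTION`/`FINAL` text protocol is an assumption of this sketch, not something AgentIQ or any other framework defines.

```python
from collections import deque
from typing import Callable, Deque, Dict, List


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call; decides the next step by simple rules."""
    observation = prompt.rsplit("Observation:", 1)[-1].strip()
    if observation:
        # A tool result is available, so answer with it.
        return f"FINAL {observation}"
    if "weather" in prompt.lower():
        return "ACTION weather Paris"
    return "FINAL No suitable tool found."


def weather_tool(city: str) -> str:
    """Hypothetical external tool; a real agent would call an API here."""
    return f"Sunny in {city}"


class Agent:
    def __init__(self, llm: Callable[[str], str], tools: Dict[str, Callable[[str], str]]):
        self.llm = llm                                   # LLM: interprets the goal
        self.tools = tools                               # External tools: name -> callable
        self.short_term: Deque[str] = deque(maxlen=5)    # Memory: recent steps only
        self.long_term: List[str] = []                   # Memory: full history

    def run(self, goal: str, max_steps: int = 3) -> str:
        observation = ""
        for _ in range(max_steps):
            # Planning (ReAct-style): ask the model what to do next,
            # given the goal, recent steps, and the latest observation.
            prompt = (
                f"Goal: {goal}\n"
                f"Recent: {list(self.short_term)}\n"
                f"Observation: {observation}"
            )
            decision = self.llm(prompt)
            self.short_term.append(decision)
            self.long_term.append(decision)
            if decision.startswith("FINAL"):
                return decision[len("FINAL"):].strip()
            # Otherwise the decision has the form "ACTION <tool> <argument>".
            _, tool_name, argument = decision.split(" ", 2)
            observation = self.tools[tool_name](argument)
        return "Stopped: step limit reached."


agent = Agent(fake_llm, {"weather": weather_tool})
print(agent.run("What is the weather in Paris?"))  # prints: Sunny in Paris
```

The loop alternates between a planning call and a tool call until the model returns a final answer or the step limit is reached, which is the basic shape that ReAct-style agents share.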

NVIDIA recently announced AgentIQ, an open-source Python library specifically designed to simplify AI agent development. It allows developers to quickly set up agentic workflows through clear, straightforward Python code.

With AgentIQ, creating an AI agent involves defining tasks and workflows using intuitive Python functions and classes. This approach significantly lowers the complexity barrier, enabling enterprises to adopt AI agents without extensive specialized knowledge. Compared to alternatives, AgentIQ offers better integration with NVIDIA GPUs, efficient memory management, and scalable solutions suitable for large-scale enterprise deployments.
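As a rough picture of what "defining tasks and workflows using Python functions" can look like, the sketch below registers plain functions under names and chains them into a workflow. It deliberately does not use AgentIQ's actual API: `REGISTRY`, `register`, and `run_workflow` are hypothetical names invented for this example, and `summarize` is a placeholder where a real workflow would call a model.

```python
from typing import Callable, Dict, List

# Hypothetical registry mapping step names to plain Python functions.
REGISTRY: Dict[str, Callable[[str], str]] = {}


def register(name: str) -> Callable[[Callable[[str], str]], Callable[[str], str]]:
    """Hypothetical decorator that records a function under a step name."""
    def wrap(fn: Callable[[str], str]) -> Callable[[str], str]:
        REGISTRY[name] = fn
        return fn
    return wrap


@register("clean")
def clean(text: str) -> str:
    # Collapse repeated whitespace and trim the ends.
    return " ".join(text.split())


@register("summarize")
def summarize(text: str) -> str:
    # Placeholder for an LLM call: keep only the first sentence.
    return text.split(".")[0] + "."


def run_workflow(steps: List[str], payload: str) -> str:
    """Run the named steps in order, feeding each output into the next step."""
    for step in steps:
        payload = REGISTRY[step](payload)
    return payload


result = run_workflow(["clean", "summarize"], "  Agents   plan tasks. They also call tools.  ")
print(result)  # prints: Agents plan tasks.
```

Because each step is an ordinary function, it can be unit-tested and reused across workflows, which is the kind of composability the paragraph above describes.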

Key components of AgentIQ include:

- Tutorials and Blueprints: Step-by-step resources such as the AI-Q NVIDIA Blueprint, useful for creating practical applications like customer support bots or robotic automation.

- Comprehensive Documentation: Detailed guidance addressing challenges like memory handling, structured task planning, and performance optimization.

- Enterprise Advantages: Simplified agent deployment, seamless GPU integration, and enterprise-level scalability for handling large, complex tasks.

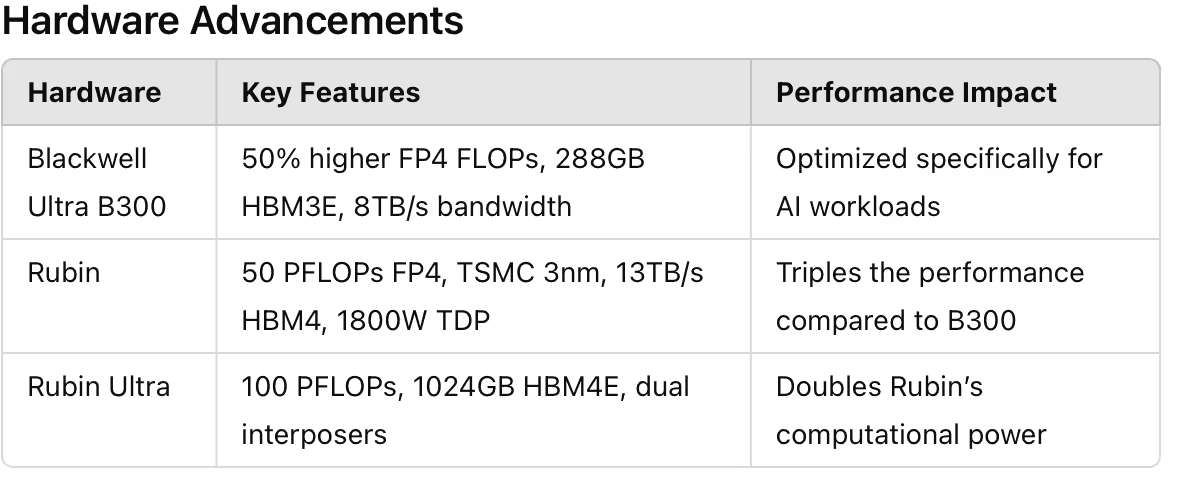

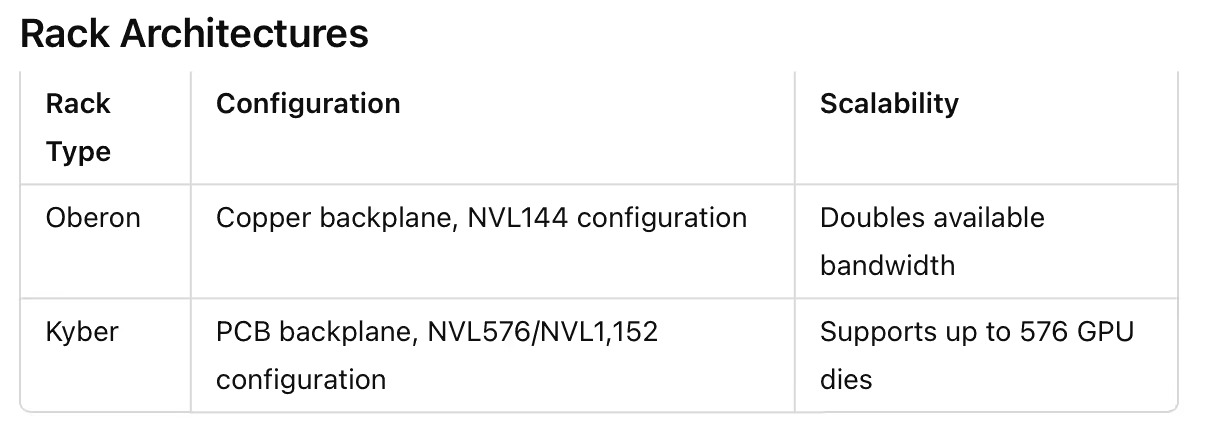

During NVIDIA’s GTC 2025 event, significant hardware innovations were introduced to support these AI agents. These hardware enhancements directly benefit the agents, enabling them to manage increasingly complex real-time operations and large-scale deployments.

Software innovations introduced at GTC 2025, such as the Dynamo inference stack, provide further support for AI agents:

- Smart Router: Efficiently distributes workloads across GPUs.

- GPU Planner: Dynamically adjusts GPU resource allocations.

- Improved NCCL Collective: Significantly lowers latency, crucial for real-time applications.

- NVMe KV-Cache Offload: Improves response times by efficiently managing cache storage.
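The first two ideas in this list, routing and planning, can be illustrated with a small sketch. This is not Dynamo's algorithm or API: `Worker`, `route`, and `plan_workers` are hypothetical names, and the policies shown (send each request to the least-loaded worker, size the worker pool to the queue) are simplifying assumptions chosen only to show the general idea.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Worker:
    """Hypothetical GPU worker tracked by the number of tokens waiting in its queue."""
    name: str
    queued_tokens: int = 0


def route(workers: List[Worker], request_tokens: int) -> Worker:
    """Router sketch: send the request to the worker with the least queued work."""
    target = min(workers, key=lambda w: w.queued_tokens)
    target.queued_tokens += request_tokens
    return target


def plan_workers(queue_depth: int, per_worker_capacity: int, max_workers: int) -> int:
    """Planner sketch: choose a worker count that covers the queue, within [1, max_workers]."""
    needed = -(-queue_depth // per_worker_capacity)  # ceiling division
    return max(1, min(max_workers, needed))


workers = [Worker("gpu0"), Worker("gpu1")]
assignments = [route(workers, n).name for n in (300, 100, 100, 50)]
print(assignments)  # prints: ['gpu0', 'gpu1', 'gpu1', 'gpu1']
print(plan_workers(queue_depth=2500, per_worker_capacity=1000, max_workers=8))  # prints: 3
```

A production router would likely weigh more than queue length, for example whether a worker already holds a relevant cache, but the load-balancing and resizing decisions follow the same pattern.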

Networking advancements also help optimize AI agent deployments. NVIDIA introduced Co-Packaged Optics (CPO) and high-capacity switches like the Quantum-X800 Q3400, enhancing network efficiency and reducing energy consumption for large AI clusters.

The Improved NCCL Collective cuts latency by 4x for smaller messages, enhancing throughput, while NIXL, a low-latency transfer engine using InfiniBand GPUDirect Async (IBGDA), simplifies data movement. NVMe KV-Cache Offload moves less-used KV caches to storage instead of discarding them, reducing recomputation and improving response times (e.g., 50-60% hit rate in multi-turn conversations).
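The offload idea can be sketched as a two-tier cache: when the fast tier is full, the least recently used entry is moved to a slower tier rather than thrown away, so a returning conversation can reload its KV cache instead of recomputing it. The class below is a hypothetical illustration, not Dynamo's implementation: `TieredKVCache` is an invented name, a plain dictionary stands in for NVMe storage, and the LRU eviction policy is an assumption.

```python
from collections import OrderedDict
from typing import Dict, Optional


class TieredKVCache:
    """Hypothetical two-tier KV cache: a small fast tier plus a slow offload tier."""

    def __init__(self, gpu_slots: int):
        self.gpu_slots = gpu_slots
        self.gpu: "OrderedDict[str, bytes]" = OrderedDict()  # fast tier, kept in LRU order
        self.storage: Dict[str, bytes] = {}                  # slow tier (stand-in for NVMe)
        self.hits = 0
        self.misses = 0

    def put(self, session: str, kv: bytes) -> None:
        self.gpu[session] = kv
        self.gpu.move_to_end(session)  # mark as most recently used
        while len(self.gpu) > self.gpu_slots:
            old_session, old_kv = self.gpu.popitem(last=False)  # least recently used
            self.storage[old_session] = old_kv                  # offload, do not discard

    def get(self, session: str) -> Optional[bytes]:
        if session in self.gpu:
            self.gpu.move_to_end(session)
            self.hits += 1
            return self.gpu[session]
        if session in self.storage:
            kv = self.storage.pop(session)
            self.put(session, kv)  # promote back to the fast tier
            self.hits += 1
            return kv
        self.misses += 1
        return None  # the caller would have to recompute the prefill

    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


cache = TieredKVCache(gpu_slots=2)
for session in ("a", "b", "c"):
    cache.put(session, session.encode())

print(sorted(cache.storage))  # prints: ['a']  (oldest session was offloaded)
print(cache.get("a"))         # prints: b'a'   (reloaded from the slow tier)
print(cache.get("z"))         # prints: None   (never cached, must be recomputed)
print(cache.hit_rate())       # prints: 0.5
```

In this toy run, one of two lookups is served from cache, so the hit rate is 0.5. The 50-60% figure quoted above would correspond to roughly that share of multi-turn requests avoiding recomputation.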

Dynamo democratizes efficient inference, competing with tools like vLLM and SGLang, and significantly boosts throughput, especially for interactive use cases.

Real-world Applications and Industry Impact

AI agents supported by AgentIQ and NVIDIA’s hardware are already impacting various sectors:

- Customer Service: Automated handling of complex customer interactions.

- Healthcare: Improved disease diagnostics through AI-driven analysis.

- Finance: Autonomous analysis and execution of financial market strategies.

- Robotics: Independent operation of robots in changing environments.

Challenges and Future Considerations

Despite the potential, several challenges remain:

- Ethics and Safety: Ensuring responsible, unbiased operation of AI agents.

- Scalability: Meeting growing demands for computational power and infrastructure.

- Interoperability: Ensuring AI agents can seamlessly integrate with existing systems.

AgentIQ’s open-source model facilitates addressing these issues through collaborative community engagement, promoting interoperability and responsible innovation.

NVIDIA’s combined focus on advanced hardware, accessible software tools like AgentIQ, and optimized networking solutions positions it effectively for enterprise adoption of AI agents, offering practical advantages and enabling broader AI use across industries.

The Enterprise Advantage

AgentIQ makes it easier for enterprises to adopt agentic AI by providing tools like a configuration builder and universal descriptors, allowing quick prototyping and integration into existing workflows.

Its open-source nature fosters community contributions, potentially speeding up innovation. Additionally, it offers access to NVIDIA AI Blueprints, which are pre-built architectures for specific use cases, further reducing development time.

AgentIQ is optimized for NVIDIA hardware, particularly through NVIDIA Inference Microservices (NIMs), which ensure efficient AI inference on GPUs. This optimization reduces latency and compute costs, while features like real-time telemetry allow for dynamic performance improvements.

I believe NVIDIA AgentIQ is likely to become a leading choice for enterprises adopting agentic development due to its ease of use, open-source nature, and optimization for NVIDIA hardware. It provides a competitive advantage by reducing development complexity, optimizing performance on NVIDIA GPUs, and integrating with cloud platforms like Azure AI Foundry for scalability.

Enterprises shouldn’t overlook NVIDIA AgentIQ. It’s more than just another AI framework—it’s a practical tool that helps teams get results faster. The open-source approach, ready-to-use blueprints, and GPU optimizations make development simpler and less expensive, making it easier to move quickly from testing ideas to actually getting things done.

Resources:

- NVIDIA AgentIQ Developer Page with benefits for enterprises

- NVIDIA Blog explaining what agentic AI is and NVIDIA's role

- GitHub NVIDIA AgentIQ repository with hardware integration details

- Microsoft Azure Blog on accelerating agentic workflows with AgentIQ

- NVIDIA Blog on AI Agents Blueprint powered by AgentIQ

- NVIDIA Blog on Dynamo
